
For any search engine, it is important to respond promptly to a user's query.

To do this, a search engine uses complex algorithms that collect and analyze information about sites; if the information is judged to be high quality, the site is ranked at the top of the results.

To reach the top of the search results, a site must be well optimized: useful not only to users but also to search engines.

Search bots respond to certain signals: external and internal optimization factors. External factors are extremely difficult to influence, but internal factors are much more straightforward.

Internal optimization factors are the elements that determine a site's quality. Improving them makes the information on the site more relevant and raises the resource in the search results.

Optimization of pages and of the site as a whole falls into two types: technical and content. Both start with an internal SEO audit, which reveals the problems the site has.

Technical optimization

Technical optimization ensures fast loading, correct display of the site on different devices, and properly configured indexing. Step by step:

Setting the Last-Modified header

This header tells the client (a browser or a search robot) when the page was last changed. If a client has received a Last-Modified header, then on its next request to that address it sends an If-Modified-Since header, asking whether the page has changed since the date it received. On receiving If-Modified-Since, the server compares that timestamp with the time the page was last modified. If the page has not changed, the server responds with 304 Not Modified.

This saves a lot of traffic, because a 304 response contains no page body: the server does not retransmit the page. Server load can drop by up to 30%. This matters most for high-traffic sites with constant updates, such as job boards and live broadcasts.

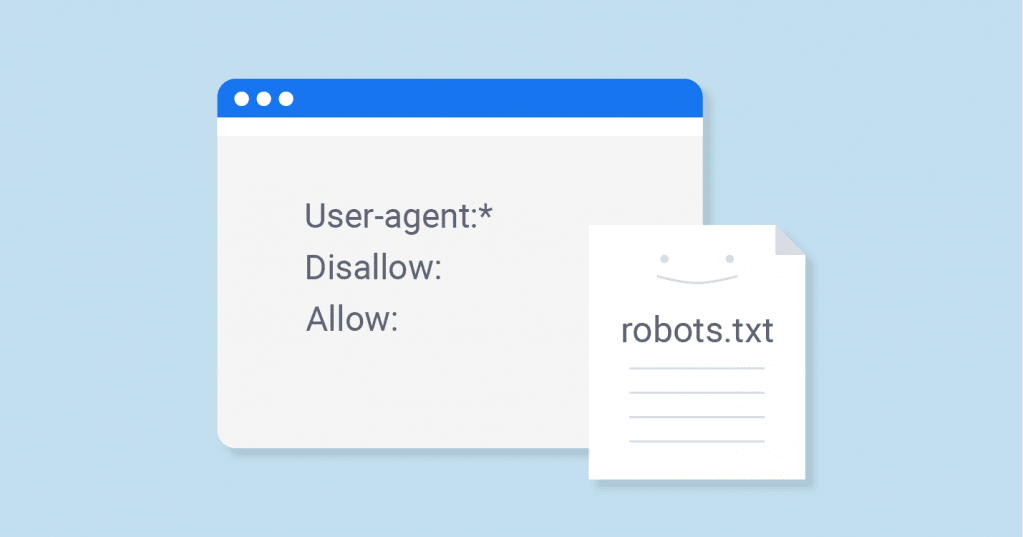
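A minimal sketch of the server-side check described above, assuming the page's last-modification time is known as a UTC datetime. The function name `conditional_response` and its `(status, headers)` return shape are hypothetical; the HTTP date handling uses the standard library's `email.utils` helpers.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def conditional_response(last_modified, if_modified_since=None):
    """Return (status, headers) for a GET request to a page.

    last_modified: timezone-aware UTC datetime of the page's last change.
    if_modified_since: raw If-Modified-Since header from the client, or None.
    """
    # HTTP dates have one-second precision, so drop microseconds first.
    last_modified = last_modified.replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            since = None  # unparseable date: ignore it and send the full page
        if since is not None:
            if since.tzinfo is None:
                since = since.replace(tzinfo=timezone.utc)  # treat as UTC
            if last_modified <= since:
                return 304, headers  # not modified: headers only, no body
    return 200, headers  # changed or first visit: send the full page


page_changed = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(conditional_response(page_changed, "Mon, 01 Jan 2024 12:00:00 GMT"))
# (304, {'Last-Modified': 'Mon, 01 Jan 2024 12:00:00 GMT'})
```

A real server would also send the page body with the 200 response. The comparison logic is the part that saves traffic.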

Setting up robots.txt

This file sets crawling parameters for robots: it specifies which sections robots should and should not visit. In addition, you can set the following directives in it:

- Clean-param (tells the robot which URL parameters do not need to be taken into account when indexing; this directive is specific to Yandex);

- Crawl-delay (sets the minimum interval between the end of loading one page and the start of loading the next; not every search engine honors it, and Google, for example, ignores it);

- Sitemap (the address of the sitemap file).

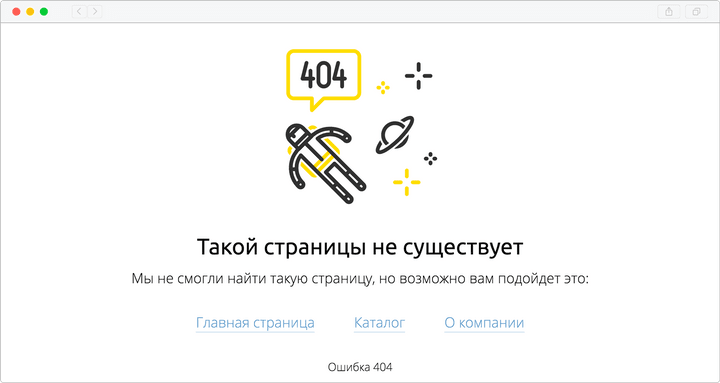
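To see how a robots.txt file will be interpreted, you can parse it locally with Python's standard `urllib.robotparser`. The file contents and URLs below are made-up examples. This parser understands `Disallow`, `Crawl-delay` and `Sitemap`, but it silently ignores Yandex's `Clean-param`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Crawl-delay: 2
Clean-param: utm_source&utm_medium /catalog/
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse local text instead of fetching a URL

for url in ("https://example.com/catalog/coffee/", "https://example.com/admin/login"):
    print(url, "allowed" if parser.can_fetch("AnyBot", url) else "blocked")

print("crawl delay:", parser.crawl_delay("AnyBot"))  # seconds, from Crawl-delay
print("sitemaps:", parser.site_maps())               # site_maps() needs Python 3.8+
```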

Correct handling of non-existent pages

If such pages get indexed, this harms both the site's indexing and user behavior.

When someone opens a non-existent page, they are shown a 404 error. It usually appears for one of two reasons:

- The page has been removed from the site.

- The page's address has changed.

So that error pages do not put visitors off, design them properly: include links to the home page and to other sections.

If most of a site's pages return errors, its positions in search results will drop. Online stores face this problem especially often: when a product card is deleted, links to it remain active. Bitrix CMS users also run into 404 problems: a page can work normally yet return a 404 status.

You can find such errors in search engine webmaster panels or with site-scanning programs. For pages that have been removed permanently, it is recommended to return a 410 status so that robots drop them from the index sooner. Another option is to redirect to the new address.

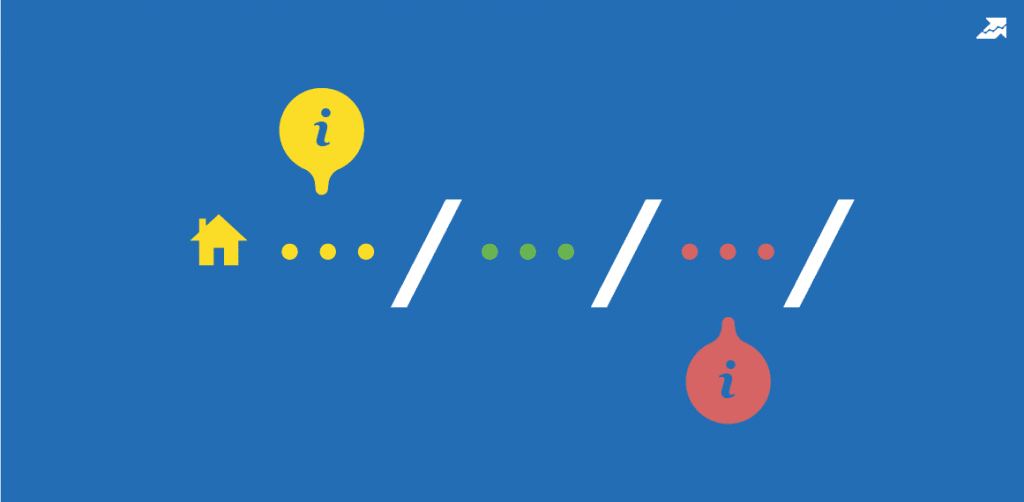
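Here is a minimal sketch of how a server might pick the status code for a requested path, following the advice above: 301 for moved pages, 410 for pages removed permanently, and 404 otherwise. The `EXISTING`, `MOVED` and `REMOVED` tables and all paths are hypothetical. In practice they would come from the CMS database.

```python
# Hypothetical data: live pages, old path -> new path, and permanently deleted paths.
EXISTING = {"/", "/catalog/capsule-coffee-machines/"}
MOVED = {"/catalog/old-coffee-machine/": "/catalog/capsule-coffee-machines/"}
REMOVED = {"/catalog/discontinued-model/"}


def resolve(path):
    """Return (status, redirect location or None) for a request path."""
    if path in EXISTING:
        return 200, None
    if path in MOVED:
        return 301, MOVED[path]  # permanent redirect to the new address
    if path in REMOVED:
        return 410, None  # Gone: the page will not come back
    return 404, None  # unknown address: show a helpful 404 page


print(resolve("/catalog/old-coffee-machine/"))  # (301, '/catalog/capsule-coffee-machines/')
```

Whatever the status, the body of an error page should still contain links to the home page and the main sections.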

URL generation

A page's web address should reflect its content so that users understand where they are. A well-formed address also helps search engines understand the topic of the page.

To achieve this, use human-readable URLs and keep the page nesting correct. It is recommended to use no more than three levels of nesting, so that a user can reach any page of the site from the home page in two clicks.

To build URLs correctly:

- Follow the site hierarchy when building the address.

- Do not make the address too long.

- Make the structure match the names used in the menu and in the breadcrumbs.

- Make every address unique.

- Include a few keywords in the address to help promote the page.
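A sketch of building a human-readable, hierarchical URL from section names. The `slugify` and `build_url` helpers are hypothetical. The 60-character limit is an assumed example of "not too long". Non-Latin titles (for example, Cyrillic) would first need transliteration, which this sketch does not do: it simply drops characters that have no ASCII form.

```python
import re
import unicodedata


def slugify(text, max_length=60):
    """Turn a page name into a lowercase, hyphen-separated URL segment."""
    # Strip accents (e.g. "é" -> "e") and drop characters with no ASCII form.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    # Cut to the length limit without leaving a dangling hyphen.
    return text[:max_length].rstrip("-")


def build_url(*sections):
    """Join slugified section names into a nested path that mirrors the menu."""
    return "/" + "/".join(slugify(s) for s in sections) + "/"


print(build_url("Coffee machines", "Capsule coffee machines", "Dolce Gusto"))
# /coffee-machines/capsule-coffee-machines/dolce-gusto/
```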

Remove duplicate pages

Duplicate pages hurt page rankings. Although ordinary users may not notice them, duplicates interfere with correct indexing.

Problems that may arise:

- The search engine has difficulty identifying the relevant page.

- Optimization becomes more complicated: robots skip some pages when indexing.

- Link weight is lost.

- Search engines may impose sanctions for non-unique content, since duplicate pages are identical.

You can check for duplicates with specialized services or with the search engines' webmaster panels (Yandex.Webmaster and Google Search Console). Instead of deleting a duplicate, you can also redirect it to the desired address.
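Many duplicates are the same page reachable under slightly different addresses. The sketch below normalizes URLs and groups those that collapse to the same form. Several choices are assumptions, not universal rules:

- the set of ignored parameters (the same kind of list you would put in Clean-param);
- forcing HTTPS;
- merging www with non-www;
- adding a trailing slash to every path.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of parameters that do not change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize(url):
    """Reduce a URL to a canonical form so that duplicates compare equal."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]  # treat www and non-www as the same site
    path = parts.path or "/"
    if not path.endswith("/"):
        path += "/"  # assumes every page on the site uses a trailing slash
    # Drop ignored parameters and sort the rest so their order does not matter.
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS)
    return urlunsplit(("https", host, path, urlencode(kept), ""))


def find_duplicates(urls):
    """Group URLs by normalized form; keep only groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


print(find_duplicates([
    "https://example.com/catalog/coffee/",
    "http://www.example.com/catalog/coffee?utm_source=mail",
    "https://example.com/catalog/tea/",
]))
```

For each group, pick one main address and redirect the others to it.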

Usability

Usability reflects how simple and convenient a site is to use. The resource needs a clear interface so that users can easily navigate between pages. Usability affects how much time visitors spend on the site and whether they return to it.

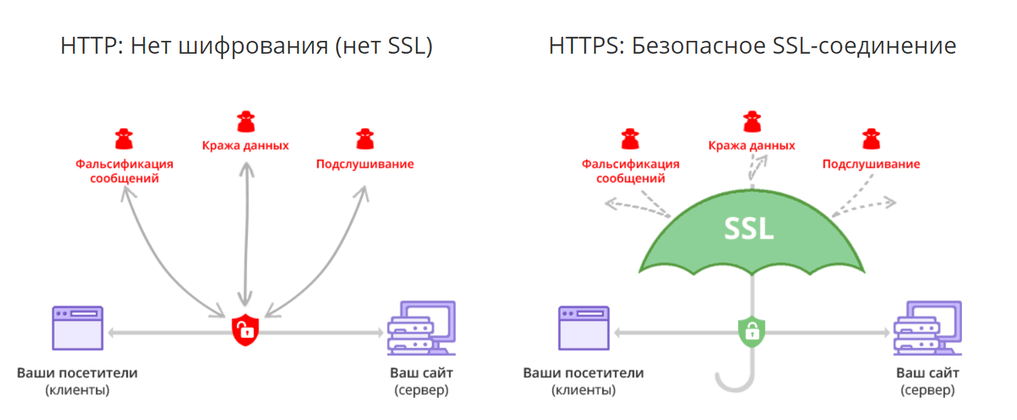

Installing an SSL certificate (HTTPS)

HTTPS transmits data over the network through an encrypted SSL/TLS connection. This cryptographic protocol secures communication and protects transmitted data from interception. It is especially important for sites that handle personal, payment, or commercial information, such as social networks and online stores. In addition, secure data transmission increases search engines' trust in the site.
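Once the certificate is installed, plain-HTTP requests are usually redirected permanently to the HTTPS version, so that search engines index only one copy of each page. Below is a minimal sketch of that rule. The function name is hypothetical. The `Strict-Transport-Security` (HSTS) header is an optional extra that tells browsers to go straight to HTTPS on later visits.

```python
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url):
    """Return (status, headers) for a request URL, forcing HTTPS."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        # Keep host, path, query and fragment; change only the scheme.
        secure = urlunsplit(("https",) + tuple(parts[1:]))
        return 301, {"Location": secure}  # permanent redirect to the secure address
    # Already on HTTPS: serve the page and ask browsers to stay on HTTPS for a year.
    return 200, {"Strict-Transport-Security": "max-age=31536000"}


print(https_redirect("http://example.com/catalog/?page=2"))
# (301, {'Location': 'https://example.com/catalog/?page=2'})
```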

Setting up the navigation chain

The navigation chain, or breadcrumbs, is a key element of site navigation.

Breadcrumbs are needed on any site with a logical nesting of sections: information sites, online stores, service sites, corporate sites, etc.

Properly configured breadcrumbs show visitors where they are and let them return to a higher-level section if needed.

Breadcrumbs serve two functions:

- They have a positive effect on SEO.

- They improve website usability.

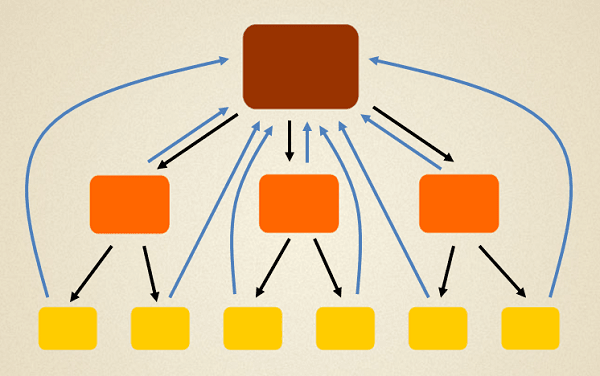
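Breadcrumbs can also be described with Schema.org's `BreadcrumbList` type, which search engines may use to show the chain in the snippet. Below is a sketch that generates the JSON-LD script tag. The site URL and section names are made-up examples, and `breadcrumb_jsonld` is a hypothetical helper.

```python
import json


def breadcrumb_jsonld(base_url, crumbs):
    """crumbs: list of (name, path) pairs from the home page down to the current page."""
    items = [
        {
            "@type": "ListItem",
            "position": position,  # 1-based order in the chain
            "name": name,
            "item": base_url.rstrip("/") + path,
        }
        for position, (name, path) in enumerate(crumbs, start=1)
    ]
    data = {"@context": "https://schema.org", "@type": "BreadcrumbList", "itemListElement": items}
    return '<script type="application/ld+json">' + json.dumps(data, ensure_ascii=False) + "</script>"


print(breadcrumb_jsonld("https://example.com/", [
    ("Home", "/"),
    ("Coffee machines", "/coffee-machines/"),
    ("Capsule", "/coffee-machines/capsule/"),
]))
```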

Setting up internal linking

Internal linking distributes link weight and increases the relevance of pages.

Types of linking:

- Navigational (quick movement from section to section).

- Contextual (links placed within the text).

- Site-wide (links to the main pages that appear on every page).

- Useful links (for example, a block of recommended products).

Internal linking:

- improves usability, since users move between sections faster and more easily;

- speeds up indexing, since search bots find the information they need more quickly;

- distributes link weight sensibly and increases the weight of pages;

- ensures the coherence and integrity of the site;

- helps promote pages for mid- and low-frequency queries.

Well-built linking is key to successful website promotion. It has many nuances, and search engine algorithms keep changing, so linking schemes need to be reviewed and updated regularly.
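One way to audit linking is to compute each page's click depth with a breadth-first search over internal links. Click depth is the minimum number of clicks needed to reach the page from the home page. Pages that are deep or unreachable (orphans) receive little link weight and are found late by robots. The link graph below is a made-up example; in practice it would come from a site crawler. The two-click threshold follows the recommendation in the URL section.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/catalog/", "/about/"],
    "/catalog/": ["/catalog/capsule/", "/"],
    "/catalog/capsule/": ["/catalog/capsule/krups/"],
    "/catalog/capsule/krups/": [],
    "/about/": [],
    "/old-promo/": ["/"],  # nothing links here: an orphan page
}


def click_depths(links, start="/"):
    """Breadth-first search: fewest clicks from start to every reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


depths = click_depths(LINKS)
too_deep = [page for page, d in depths.items() if d > 2]
orphans = [page for page in LINKS if page not in depths]
print(too_deep)  # ['/catalog/capsule/krups/']
print(orphans)   # ['/old-promo/']
```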

Creating microdata

Microdata helps search engines process a page's information and present it conveniently in search results. Four markup standards are relevant:

- Open Graph. Developed by Facebook, it is used to build a site preview on social networks. It is now also used by companies such as Yandex, VKontakte, WhatsApp, Viber, and Telegram.

- Schema.org. Used by the major search engines (Yandex, Google). This markup can describe almost any element or block on a site, and its versatility makes it one of the most popular.

- Microformats. Like Schema.org, they let you get an extended snippet in search results. The main difference is that they have fewer objects and properties.

- Dublin Core. A metadata standard for describing web resources. Since 2011, it has been adopted in Russia as the national standard GOST R 7.0.10-2010. It describes the page as a whole.

Thanks to micro-markup, the site:

- can rise in the search results;

- shows more information about the resource in the snippet;

- offers more links to site sections that users can open directly from the search results.
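Here is a sketch that renders the basic Open Graph tags for a page's `<head>`: `og:title`, `og:type`, `og:url`, `og:image` and `og:description`. Social networks and messengers read these tags to build a link preview. The helper name and the page data are made-up examples. Every value is HTML-escaped so that quotes in a title cannot break the markup.

```python
from html import escape


def open_graph_tags(title, url, image, description, og_type="website"):
    """Return <meta property="og:..."> tags, one per line."""
    properties = {
        "og:title": title,
        "og:type": og_type,
        "og:url": url,
        "og:image": image,
        "og:description": description,
    }
    return "\n".join(
        f'<meta property="{name}" content="{escape(value, quote=True)}">'
        for name, value in properties.items()
    )


print(open_graph_tags(
    'Krups "Dolce Gusto" coffee machine',
    "https://example.com/catalog/krups-dolce-gusto/",
    "https://example.com/images/krups-dolce-gusto.jpg",
    "Capsule coffee machine with free delivery",
))
```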

Responsive version of the site

A responsive site is built so that its pages dynamically adjust to different screen formats. The site then displays correctly not only on computers but also on other devices (phones, tablets).

Users who open a site expect a convenient, intuitive interface that works without extra scrolling or zooming in on images. If potential customers have difficulty using a resource on a mobile device, they will go to competitors.

Benefits of a responsive version:

- Increased competitiveness.

- A wider audience (by attracting mobile traffic).

- Higher rankings in search results.
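A responsive layout normally starts with the viewport meta tag. Without it, mobile browsers render a desktop-width page and users have to zoom in. Below is a small audit sketch that uses the standard `html.parser` to check for that tag. The expected value `width=device-width` is the common convention, and the sample HTML is made up.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attribute names arrive lowercased
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def is_mobile_ready(html):
    """True if the page declares a viewport that follows the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport is not None and "width=device-width" in finder.viewport.replace(" ", "")


print(is_mobile_ready('<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'))  # True
```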

Increase page loading speed

The faster a site loads, the more likely users are to stay on it, and the better search engines index it. The optimal loading time is 1.5 to 3 seconds. Many services can check loading speed; the most popular are:

- PageSpeed Insights. A free tool from Google that analyzes page loading performance on mobile and desktop devices.

- Pingdom Tools. Runs a quick analysis of a site and identifies problems in detail.

- GTmetrix. Lets you track loading speed over time.

Registering the site in webmaster panels

Webmaster panels let you check for errors on the site and for any sanctions imposed on it. They also provide a set of tools for optimizing the site and improving its indexing.

The most popular are Yandex.Webmaster and Google Search Console.

Yandex.Webmaster provides detailed information about how a resource is performing, helps analyze statistics, and tracks possible errors.

Its main functions include:

- Monitoring the placement of external links.

- Checking the robots.txt file.

- Adding a sitemap file.

- Checking and viewing indexing errors.

- Adding a new resource.

- Site management (collecting and modifying information).
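The sitemap file submitted to the panels is an XML document in the format defined by the sitemaps.org protocol. Below is a sketch that builds one with the standard `xml.etree.ElementTree`. The `build_sitemap` helper, the URLs and the dates are made-up examples.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: list of (absolute URL, last-modified date as YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # ideally matches Last-Modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-01-01"),
    ("https://example.com/catalog/capsule-coffee-machines/", "2024-01-15"),
]))
```

The resulting file is usually placed at the site root and listed in robots.txt with the Sitemap directive.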

Google Search Console is a free service that shows how a site performs in Google search results. With its tools and reports, you can:

- Estimate traffic.

- Detect indexing issues.

- View sites linking to the resource.

- Make sure the search engine sees the site.

- Fix issues with AMP pages.

- Receive problem and spam notifications.

Website optimization for promotion

Once technical optimization is complete, you can move on to optimizing the site for promotion.

Semantics (keywords)

The semantic core is a list of words and phrases that most accurately describe the site's content and line of business. Users most often search for the information they need using these keywords. When compiling a keyword set, take into account the region and query frequency.

Depending on how often users search for a keyword or phrase, queries are divided into:

- High-frequency: the most popular queries in the niche. For example, "capsule coffee machine".

- Mid-frequency: searched more often than low-frequency queries but less often than high-frequency ones. For example, "Dolce Gusto capsule coffee machine".

- Low-frequency: phrases that specify exactly what the user needs. For example, "Krups Dolce Gusto capsule coffee machine".

To choose words that reflect users' interest in the site's subject, use the Yandex.Wordstat service. It is useful for:

- Keyword selection when launching contextual advertising.

- Analyzing the popularity of search queries.

- Collecting a semantic core for SEO website promotion.

- Analysis of the seasonality of demand for a product/service.

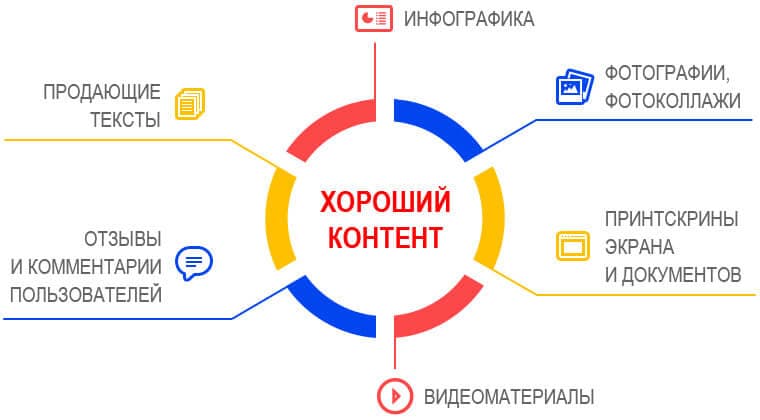
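The split into high-, mid- and low-frequency queries is relative to the niche, so there are no universal cutoffs. Below is a sketch that assigns keywords to groups by monthly search count. It assumes hypothetical thresholds of 1,000 and 100 searches. The counts are invented for illustration and are not real Wordstat figures.

```python
# Hypothetical thresholds; real cutoffs depend on the niche and the region.
HIGH_FREQUENCY_MIN = 1000
MID_FREQUENCY_MIN = 100


def frequency_group(monthly_searches):
    """Classify a query by its monthly search count."""
    if monthly_searches >= HIGH_FREQUENCY_MIN:
        return "high"
    if monthly_searches >= MID_FREQUENCY_MIN:
        return "mid"
    return "low"


# Invented example counts, not real statistics.
keywords = {
    "capsule coffee machine": 25000,
    "dolce gusto capsule coffee machine": 900,
    "krups dolce gusto capsule coffee machine": 60,
}
groups = {phrase: frequency_group(count) for phrase, count in keywords.items()}
print(groups)
```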

Graphic content

Graphic content means properly prepared images on the site. Each image should have a file name that contains keywords. Photos on the site should also be high quality and match the text.

Graphic content helps readers understand the information better and get to know the product. Images and photographs bring the text to life and show examples clearly.

It is best to create your own graphic content with your logo rather than use someone else's photos. This increases brand awareness and user trust. Once the material is ready, do not forget to protect it, for example with watermarks, to prevent competitors from copying it.

Content

Content is the information users come to the site for, and it must fully match the user's query. To fill the site well, make sure that the text is:

- Unique.

- Free of grammatical and punctuation errors.

- Useful and interesting.

- Well structured (with lists, paragraphs, and headings).

Tags

SEO tags (title, description, headings) must be filled in correctly on the page. The title tells users and search engines what the page is about.

Title requirements:

- Be unique.

- Put the main target query at the beginning of the tag.

- Read naturally, without spammy keyword phrases. For example, "buy women's clothing Moscow inexpensively" is a badly composed title; "Buy inexpensive women's clothing in Moscow" is correct.

- Be concise.

- Contain keywords.

- Repeat any single keyword no more than twice.

- Be 60-80 characters long (150 at most).

- Use keywords in varied word forms; synonyms or keywords from another query group are also acceptable.

- Attract users' attention.

- Contain no punctuation marks such as periods, exclamation marks, or question marks.

The description is a short summary of the page. It must include keywords and be unique.

Basic requirements:

- Be unique.

- Do not duplicate the title.

- Include one or two meaningful sales or promotional offers.

- Preferably use facts, evidence, and numbers to attract attention.

- Include several key phrases without keyword spamming.

- Be about 170 characters long (250-300 at most).

Headings are marked up as h1, h2, h3, and so on. H1 is the most important heading; the rest follow the hierarchy. The H1 must contain the main keyword or one of its word forms and should be 7 to 10 words long. Ideally, headings should not duplicate the title.
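The length and wording rules for the title, description and H1 are easy to check automatically. Below is a sketch of such a checker using the figures given in this article: a title of 60-80 characters (150 at most), a description of up to 300 characters, and an H1 of 7-10 words. The function name and messages are hypothetical. Treating "at the beginning" as "in the first half of the title" is an assumption.

```python
import re
from collections import Counter


def check_tags(title, description, h1, main_keyword):
    """Return a list of problems with a page's title, description and H1."""
    problems = []
    if len(title) > 150:
        problems.append("title is longer than 150 characters")
    elif not 60 <= len(title) <= 80:
        problems.append("title is outside the optimal 60-80 characters")
    if re.search(r"[.!?]", title):
        problems.append("title contains a period, exclamation mark or question mark")
    position = title.lower().find(main_keyword.lower())
    if position == -1:
        problems.append("title does not contain the main query")
    elif position > len(title) // 2:  # assumption: "beginning" = first half
        problems.append("main query is not near the beginning of the title")
    word_counts = Counter(re.findall(r"\w+", title.lower()))
    repeated = sorted(w for w, n in word_counts.items() if n > 2)
    if repeated:
        problems.append("words repeated more than twice: " + ", ".join(repeated))
    if description.strip().lower() == title.strip().lower():
        problems.append("description duplicates the title")
    if len(description) > 300:
        problems.append("description is longer than 300 characters")
    if not 7 <= len(h1.split()) <= 10:
        problems.append("H1 is not 7-10 words long")
    return problems


print(check_tags(
    "Buy inexpensive women's clothing in Moscow with free delivery",
    "Dresses, coats and jeans from 20 brands. Delivery across Moscow in 1 day.",
    "Inexpensive women's clothing with delivery across Moscow",
    "women's clothing",
))  # []
```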

Commercial Factors

Commercial factors are the elements and features of a site that most affect buyers' trust.

They include:

- a feedback form;

- contact information;

- a directions diagram or map;

- reviews;

- an "About the company" section.

Depending on the business, there may be more or fewer of them. For example, the factors that influence purchases in an online store differ from those on a service site. In an online store, more attention is paid to shopping convenience (detailed descriptions, online chat, a buy button, ordering in a couple of clicks, etc.).

On a service site, the most important thing is a detailed description backed by evidence (reviews, expertise, etc.).

To make the site more authoritative and improve its rankings, keep improving and expanding its commercial factors. Complete and transparent information about a business, product, or service has a positive effect on the site's behavioral factors.